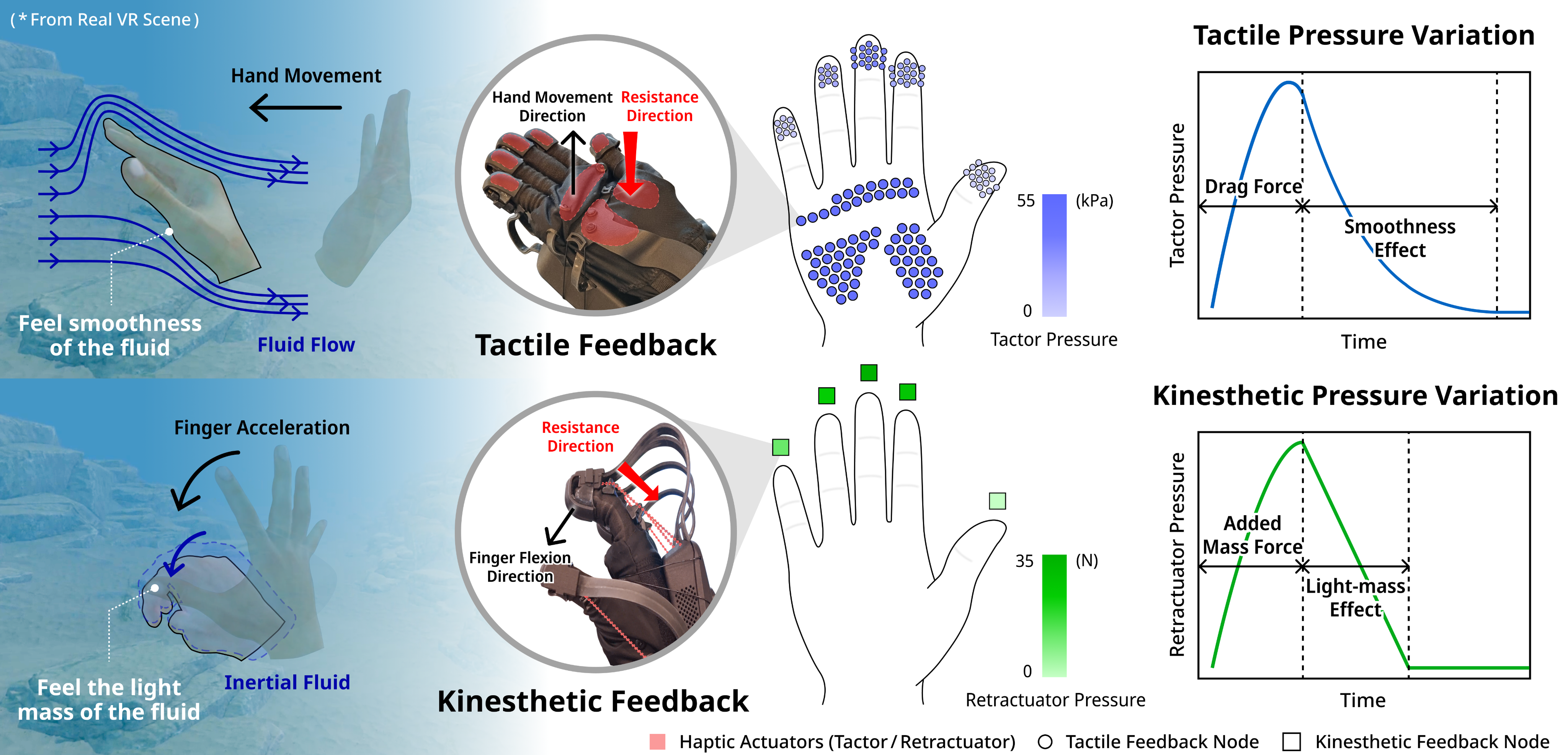

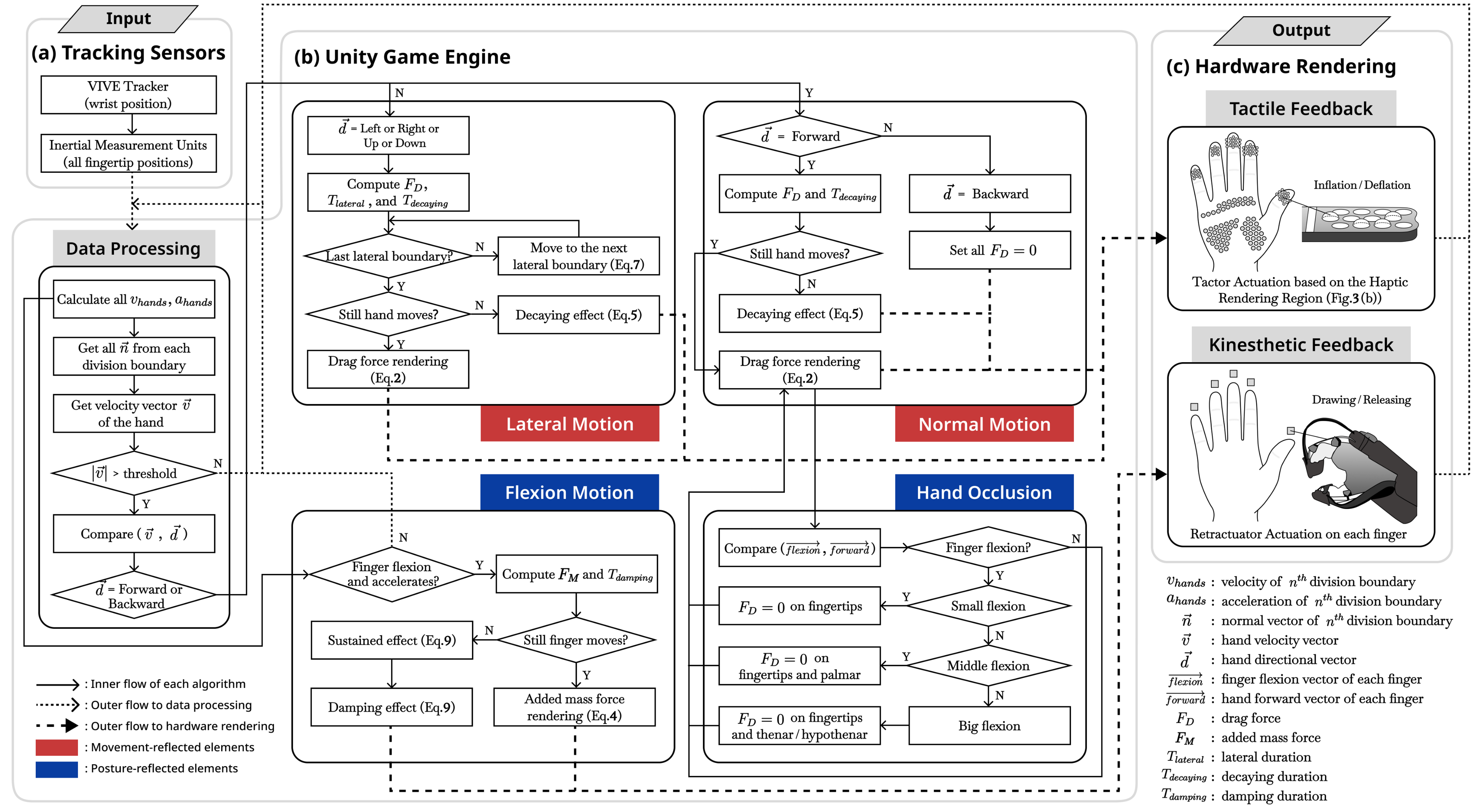

With the advancement of haptic interfaces, recent studies have focused on enabling detailed haptic experiences in virtual reality (VR), such as fluid-haptic interaction. However, rendering forces from fluid contact is often computationally expensive. Given that motion-induced fluid feedback is crucial to the overall experience, we focus on hand-perceivable forces to enhance underwater haptic sensation, achieving high-fidelity rendering while accounting for human perceptual capabilities. We present a new multimodal (tactile and kinesthetic) haptic rendering pipeline. It renders drag and added mass forces that adapt dynamically to the user's hand movement and posture, delivered through pneumatic haptic gloves. We defined decaying and damping effects to convey inertia-driven fluid properties and, in a perception study, confirmed that they have a significant perceptual impact compared with using physics-based equations alone. By modulating pressure variations, we reproduced fluid smoothness via exponential tactile deflation and light fluid mass via linear kinesthetic feedback. Our pipeline enabled richer and more immersive VR underwater experiences by accounting for precise hand regions and motion diversity.
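As a rough illustration of the two force terms named above, the following sketch uses the textbook quadratic drag and added-mass formulas. It is not the authors' implementation: the function names, the coefficient defaults, and the example hand area and volume are all assumptions chosen for illustration.

```python
import math

RHO_WATER = 1000.0  # kg/m^3, approximate density of fresh water


def drag_force(speed, area, drag_coeff=1.2, rho=RHO_WATER):
    """Textbook quadratic drag magnitude: 0.5 * rho * C_d * A * v^2.

    speed: hand speed relative to the fluid (m/s)
    area: projected hand area facing the motion (m^2); depends on posture
    drag_coeff: assumed value, not taken from the paper
    """
    return 0.5 * rho * drag_coeff * area * speed ** 2


def added_mass_force(accel, volume, added_mass_coeff=1.0, rho=RHO_WATER):
    """Textbook added-mass magnitude: C_a * rho * V * a.

    accel: hand acceleration magnitude (m/s^2)
    volume: displaced fluid volume attributed to the hand (m^3), assumed
    added_mass_coeff: assumed value, not taken from the paper
    """
    return added_mass_coeff * rho * volume * accel


# Example with assumed values: an open palm (~0.015 m^2) moving at 1 m/s
# while accelerating at 2 m/s^2.
total = drag_force(1.0, 0.015) + added_mass_force(2.0, 0.0004)
```

Drag grows with the square of hand speed, while the added-mass term scales with acceleration, so it matters mostly when the hand starts, stops, or changes direction.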

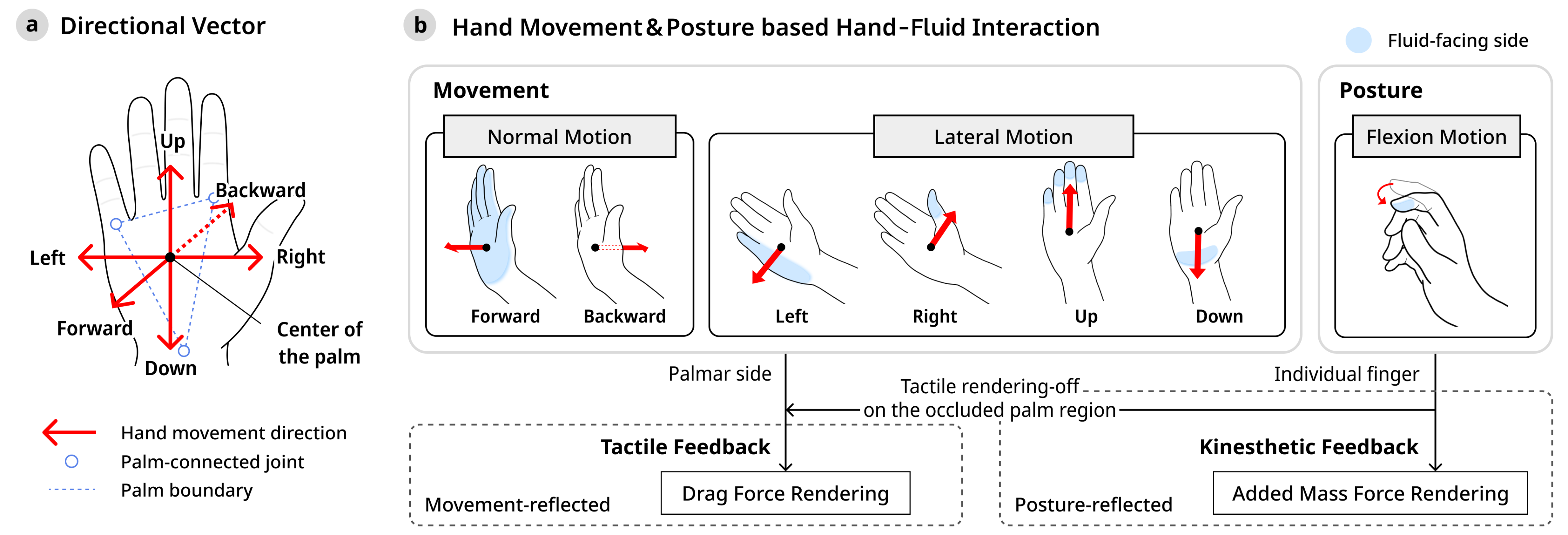

We present the working principle and system overview of AquaHaptics, defining directional vectors for hand movements and a hierarchy of hand–fluid interactions based on hand movement and posture.

We explored the size and shape of phased arrays and distance range, enabling effective finger-level wearable UMH by simulating the spatial distribution of acoustic radiation pressure. We propose an overall haptic rendering pipeline in which hand positions are acquired from tracking sensors, motion-dependent fluid forces are computed, and multimodal haptic feedback is rendered through actuators.
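The three stages of the pipeline (tracking, force computation, actuation) can be sketched as a single update step. This is a minimal sketch under stated assumptions, not the published system: the function names, the gain, the pressure ceiling, the time constant, and the use of a drag-only force are hypothetical, and pressures are treated as unitless commands.

```python
import math


def finite_difference(prev, curr, dt):
    """Estimate a velocity vector from two tracked positions."""
    return tuple((c - p) / dt for p, c in zip(prev, curr))


def norm(v):
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in v))


def linear_pressure(force, gain, p_max):
    """Kinesthetic channel (assumed mapping): pressure proportional to force, clamped."""
    return min(max(gain * force, 0.0), p_max)


def exponential_deflation(p_start, elapsed, tau):
    """Tactile channel (assumed mapping): pressure decays exponentially after motion."""
    return p_start * math.exp(-elapsed / tau)


def pipeline_step(prev_pos, curr_pos, dt, area=0.015, drag_coeff=1.2,
                  rho=1000.0, gain=2.0, p_max=50.0):
    """One update: tracked positions -> hand speed -> fluid force -> pressure command."""
    velocity = finite_difference(prev_pos, curr_pos, dt)   # stage 1: tracking
    speed = norm(velocity)
    force = 0.5 * rho * drag_coeff * area * speed ** 2     # stage 2: drag only
    command = linear_pressure(force, gain, p_max)          # stage 3: actuation
    return speed, force, command


# Example: the palm moves 0.1 m along x within 0.1 s.
speed, force, command = pipeline_step((0.1, 0.0, 0.0), (0.2, 0.0, 0.0), 0.1)
```

A real system would run this step once per tracking frame and would likely filter the finite-difference velocity, since raw position differences amplify sensor noise.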

To establish a perceptual reference for real-world water interactions, participants first immersed one hand in a water tank and performed repetitive motions, focusing on water pressure sensations above the palm prior to the VR study.

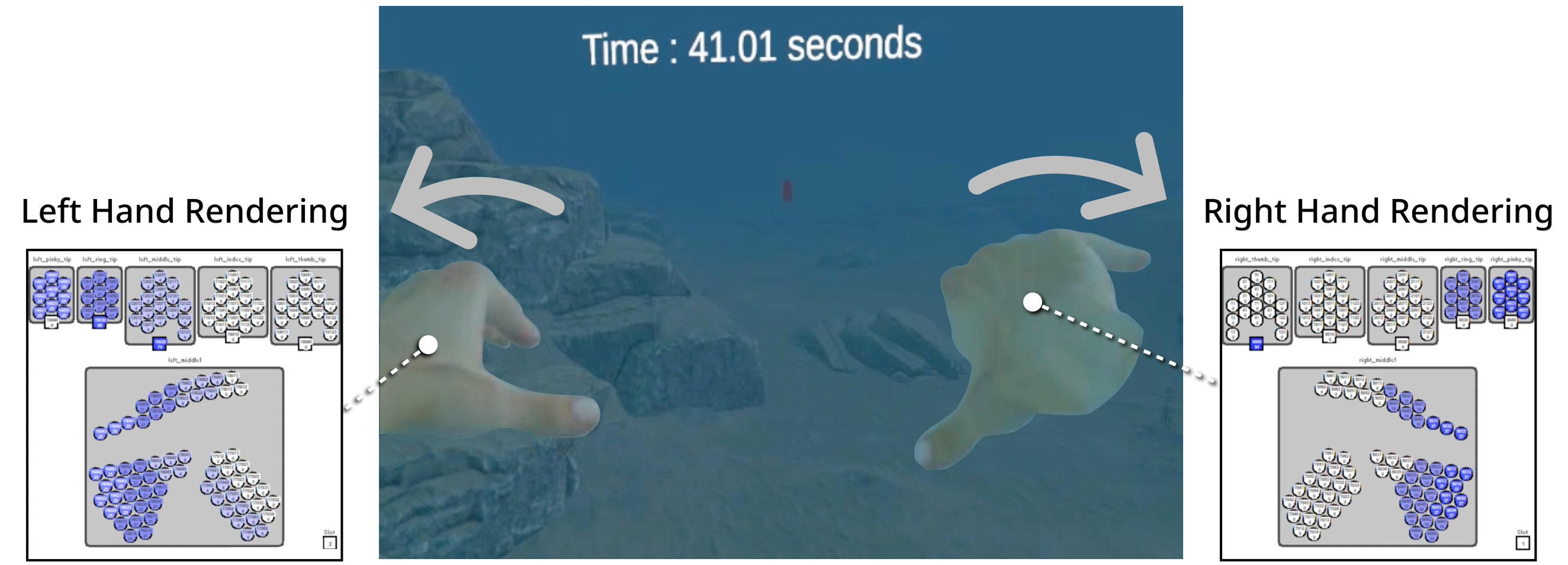

We present an underwater swimming scenario from the application study, in which users interact using both hands.

@ARTICLE{11359085,
  author={Yang, Soyeong and Yoon, Sang Ho},
  journal={IEEE Transactions on Visualization and Computer Graphics},
  title={AquaHaptics: Hand-based Multimodal Haptic Interactions for Immersive Virtual Underwater Experience},
  year={2026},
  volume={},
  number={},
  pages={1-17},
  keywords={Fluids;Haptic interfaces;Hands;Rendering (computer graphics);Drag;Force;Pipelines;Friction;Tactile sensors;Surface resistance;Human-computer interaction;Fluid-haptic Rendering;Human perception;Virtual reality},
  doi={10.1109/TVCG.2026.3652832}
}